AIAAIC Alert #35

AI scraper bot transparency; Olympic surveillance fears and malicious deepfakes; Elon Musk's Grok chatbot gets spicy; AI risks and harms; 2024 user survey

#35 | August 2, 2024

Keep up with AIAAIC via our Website | X/Twitter | LinkedIn

Shedding light on scraper bots

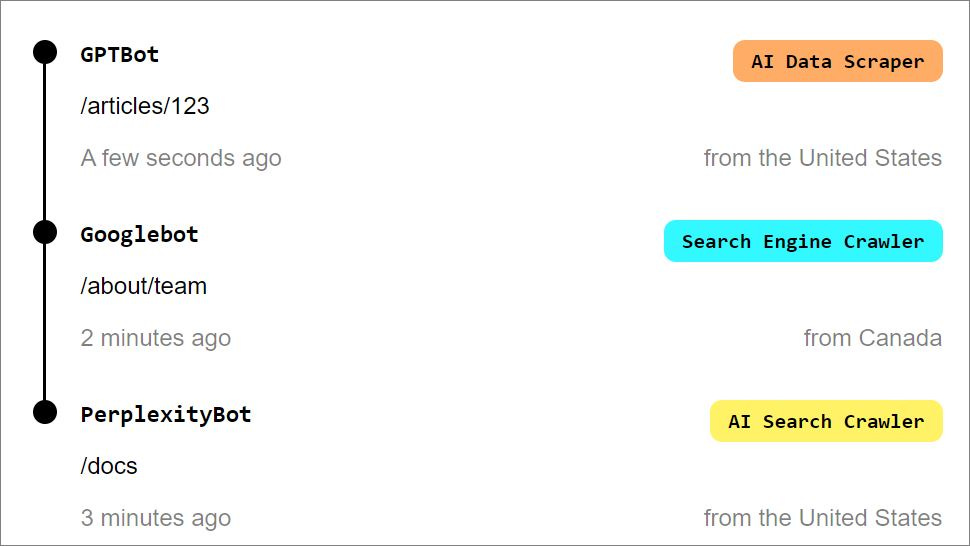

Image: Dark Visitors

Author: Charlie Pownall, AIAAIC founder and managing editor

In the last few days, Anthropic's ClaudeBot visited technology advice site iFixit.com a million or so times over a 24-hour period, gobbling its content, driving its IT team to distraction, and reportedly costing it over USD 5,000 in bandwidth charges.

A notoriously opaque and mostly unregulated set of conventions and techniques, web scraping underpins many business models, from online price comparison and product review engines to reputation monitoring and website change detection.

It is technically possible to prevent - or at least minimise - web scraping, but its legality remains unclear in many parts of the world and the enforceability of website terms has proved tricky.

However, the problem has taken on greater prominence as AI companies race to develop more advanced language models and tools, turning to large-scale web scraping to gather the massive amounts of data needed to train their systems.

One of the main points of contention is the circumvention of robots.txt, the file that allows website owners to specify which parts of their sites can be crawled by bots. But OpenAI, Voyager Labs and other AI companies appear to be ignoring this protocol to train and power their systems.
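To illustrate how the protocol works, here is a minimal sketch of a hypothetical robots.txt that asks Anthropic's ClaudeBot and OpenAI's GPTBot crawlers to stay away entirely while keeping all other bots out of a /private/ folder only, checked with Python's standard-library `urllib.robotparser`. The file contents, the `SomeOtherBot` name and the example.com URLs are illustrative assumptions, not any real site's configuration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: ban two AI crawlers site-wide,
# and keep every other bot out of /private/ only.
ROBOTS_TXT = """\
User-agent: ClaudeBot
Disallow: /

User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content supplied as a string (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    urls = ("https://example.com/guides/", "https://example.com/private/page")
    for agent in ("ClaudeBot", "GPTBot", "SomeOtherBot"):
        for url in urls:
            # can_fetch() only reports what the file requests;
            # it is up to the crawler to honour the answer.
            print(f"{agent:<13} {url:<36} allowed={rules.can_fetch(agent, url)}")
```

Because compliance happens entirely on the crawler's side, the file stops nothing by itself: a bot that ignores it, or a third-party crawler fetching pages under a different user-agent name, can still collect the content.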

Quite how they do this remains open to question, but Perplexity AI seems to have used third-party crawlers to bypass robots.txt and plagiarise the content of Bloomberg, Forbes and other publishers in order to create AI-generated summaries.

The implications are far-reaching. Publishers view unrestricted scraping of their content as a deliberate attempt to undermine their ability to monetise their work and sustain quality journalism. They also fear it damages the industry as a whole and will put journalists, designers and others out of work.

Fortunately, some of the smarter AI companies seem to realise that they cannot continue looting others’ content ad infinitum.

AI scraping lawsuits (e.g. OpenAI, Voyager Labs) are on the rise, politicians and regulators are on the prowl, data licensing deals are being carved out, and entities such as Dark Visitors (pictured above) are actively exposing scraper bots, whilst equipping organisations with the tools to protect their assets.

As the AI industry continues to grow, the debate over web scraping is likely to intensify and the outcome of legal challenges, such as the 2023 suit against OpenAI in California, may set important precedents for how data collection for AI training is regulated in the future.

Clearly, finding a balance between fostering AI innovation and protecting content creators' rights is necessary.

But when it comes to crawling and scraping, the AI industry has done itself no favours and, in many instances, continues to do itself none. The sun is shining where Anthropic, OpenAI et al. don’t want it to, casting light not just on a murky practice but on their own business models, the viability of which is increasingly being questioned.

In the crosshairs … in France

AI and algorithmic incidents hitting the headlines

Image: Maxim Hopman, Unsplash

Paris Olympics AI scans fuel surveillance fears

Fears include allegations of "creeping surveillance" that threatens fundamental rights such as privacy and freedom of expression and could have a chilling effect on public behaviour.

French court rules City of Orleans use of AI is illegal

The court rejected (pdf) the city's argument that no personal data was being processed in its AI-powered audio surveillance programme, stating that the microphones linked to cameras collect information that could identify individuals.

Deepfake France 24 journalist calls Seine water 'unsafe'

Use of the Seine for Olympic events has had something of an on-off feel, not helped by deepfakes muddying the already rather murky waters.

Google fined for training Gemini on news content without consent

The fine is understood to be the world’s first levied against an AI chatbot developer. Unusually, Google’s nemesis on this occasion was France’s competition authority.

France welfare fraud detection algorithm accused of exacerbating inequality

Investigators concluded the algorithm's criteria were poorly constructed, leading to arbitrary and potentially unfair flagging of individuals based on minor changes in behaviour.

French national police accused of illegally using facial recognition

The allegations prompted concerns about mass surveillance and infringements of individual liberties and privacy, with France's police and the Ministry of the Interior accused of opacity and inadequate accountability.

French privacy watchdog fines Clearview AI for violating privacy

Clearview AI has faced similar penalties in other countries, including the UK, Italy and Greece. Despite these fines, it has refused to pay, asserting that it is not subject to EU privacy laws because it has no clients or operations in the EU.

Visit the AIAAIC Repository for details of these and 1,600+ other AI, algorithmic and automation-driven incidents and controversies.

Report an incident or controversy.

Grok chatbot

Featured system/dataset

Image: X Corp

Grok is an AI chatbot developed by xAI, Elon Musk's artificial intelligence company. It is seen as Musk's response to OpenAI's ChatGPT, which the entrepreneur is known to consider overly politically correct.

Grok can access and process current information from the X (formerly Twitter) platform, allowing it to provide up-to-date responses, and aims to be informative and entertaining in its interactions. Like other AI models, Grok is designed to improve its performance based on user interactions and feedback.

It is currently restricted to X Premium+ subscribers in the US and Canada.

The yang: Grok is seen to benefit from real-time news and information drawn from the X platform, giving it knowledge of recent events. It can also handle multiple queries and tasks simultaneously, allowing users to switch between different requests seamlessly.

Unlike some other AI chatbots, Grok is designed to tackle "spicy" or controversial subjects that others might avoid, and to have a "rebellious streak" that produces "witty" responses intended to make conversations more "enjoyable".

The yin: Drawing on X in real time, it should come as little surprise that Grok regularly generates biased, offensive and dangerous content, and regurgitates misinformation and disinformation. It is also thought to risk violating copyright and privacy.

Incidents and issues associated with Grok:

Grok posts incorrect information about Trump assassination attempt

Grok falsely claims Indian PM Modi "ejected" from government

Report incidents and issues associated with Grok.

Research/advocacy citations, mentions

Danyk Y. An Approach to the Formation of Artificial Intelligence Development Threat Indicators

Reuel A. et al. Open Problems in Technical AI Governance

Batool A., Zowghi D., Bano M. AI Governance: A Systematic Literature Review

Bogucka E. et al. The Atlas of AI Incidents in Mobile Computing: Visualizing the Risks and Benefits of AI Gone Mobile

Sayre M.A., Glover K. Machines Make Mistakes Too: Planning for AI Liability in Contracting

Tan J. Introducing the AI Risk Series (pdf)

Explore more research reports citing or mentioning AIAAIC.

AIAAIC news

Allegra Grunberg joins the AIAAIC family

An operations specialist focused on analysing and optimising governance systems and managing cross-functional teams, Allegra Grunberg is a founding member of The Decision Lab and ContinuTech LCT. She is also a keen human rights advocate who majored in Political Science at Brandeis University and worked on Capitol Hill, in New York Mayor Bill de Blasio's office, and at the headquarters of the Fire Department of New York. Her hobbies are filmmaking, horseback riding, and reading about new discoveries in cosmology and physics. Allegra will help AIAAIC with its organisational planning and execution.

AIAAIC founder talks harms at the University of Cambridge

Charlie Pownall spoke to University of Cambridge AI ethics master's students about the nature of AI harms, why and how harms differ from risks, and why AI developers and operators prefer to disclose risks rather than harms. He also highlighted AIAAIC’s new harms taxonomy. | View/download the slides (pdf)

2024 user survey

We work hard to provide useful, free data for individuals and organisations keen to know more about the risks and harms of AI and related technologies. To do this effectively, we need to understand what you think about AIAAIC - what we’re doing well, where we can improve and what we should focus on going forward. Our survey will take at most 5 minutes, and your feedback will be invaluable. | Take AIAAIC’s 2024 user survey