AIAAIC in 2024

What we got up to over the last twelve months

Dear AIAAIC community member,

Following years of heated warnings from tech doomers, catastrophists, existentialists and effective altruists, 2024 could have been the year AI derailed elections, triggered a financial meltdown and was used to plan bioweapons attacks. It was not to be.

Is AI’s bark worse than its bite?

Human extinction avoided for now, consumers reacted furiously to massive over-charging by opaque AI-powered dynamic pricing systems, health insurers stood accused of using trade-secrecy protected algorithms to deny care to elderly and disabled citizens, and tech firms started to chop jobs thanks to their new-found productivity and efficiency.

Deepfake (aka synthetic media) scams went mainstream, as did AI porn attacks, mostly on women and students. Users of companion chatbots committed suicide. Generative AI systems continued to hallucinate extensively, in some cases dangerously; one even managed to falsely accuse a US attorney of committing murder.

Under pressure to feed their systems and their investors’ wallets, AI companies engaged in industrial-scale looting of others’ IP - including that of their peers and competitors, who looked the other way - to train their generative systems.

More tangibly, Israel used AI extensively in Gaza and Lebanon to target bomb victims while conveniently ignoring massive collateral damage. Meantime, Ukraine launched - successfully, it is said - the world’s first robot-only, combined arms assault against Russian troops.

And vast new data centres started appearing in all corners of the world to underpin the continued technological and financial growth - not to say political muscle - of system developers and deployers. For which the increasingly visible ravages of climate change are apparently a price worth paying.

Transparency and accountability washing

Sure, there’s plenty of talk of transparency and accountability, much of it driven by impending legislation such as the EU’s AI Act. In this context, there’s been some progress by developers of generative AI systems.

And yet expediency in the form of misleading marketing and communication remains rampant - something US authorities are now clamping down on. And fear of legal, financial and reputational exposure means Anthropic, Mistral, OpenAI, Stability AI and other entities continue to withhold information about the exact data used to train their systems and refuse to spell out the actual harms, including the environmental impacts, they are inflicting.

Equally, it comes as little surprise that developers and deployers are resorting to using a panoply of questionable and disproportionate quasi-legal tools to restrict access to data, code, models and other technical and corporate decision-making information.

Which is where AIAAIC fits in.

What we did and achieved in 2024

Added over 650 entries detailing the misuse of AI, algorithmic and automation systems to our incident repository (sheet)

Significantly grew our Wikipedia-style knowledge base of associated systems (example) and datasets (example)

Trialled working partnerships with three universities: Trinity College Dublin, the University of Thessaloniki and the University of Pavia

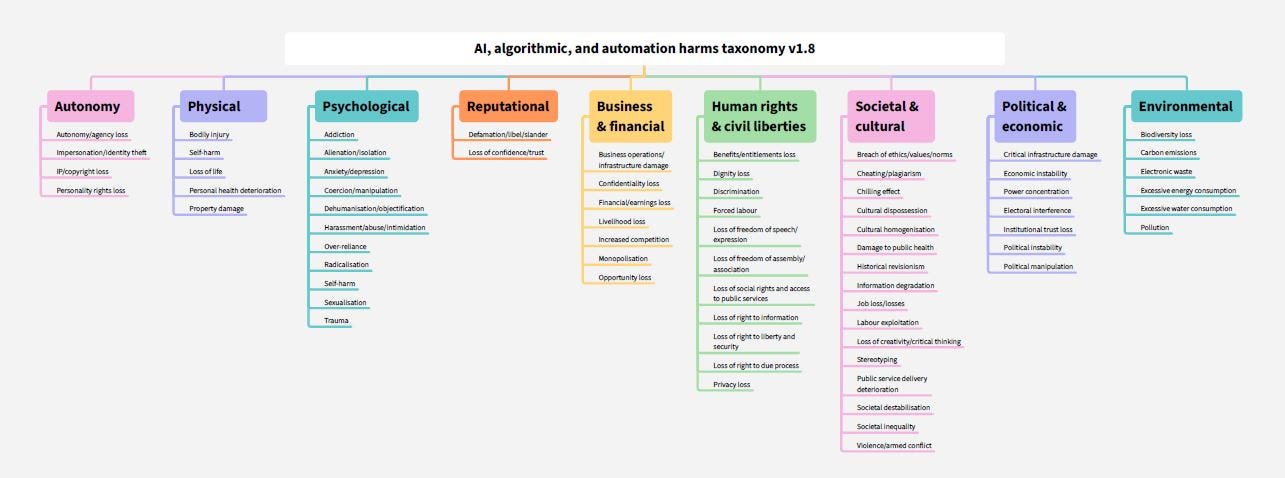

Worked with recognised experts in education, psychology, human rights, politics, economics, warfare, environment and other domains to develop and refine our human-centred harms taxonomy

Published our first research paper

Developed a new user guide for the AIAAIC Repository

Strengthened our core governance, including creating and rolling out our Values and Code of conduct across our community

Overhauled our volunteer application process, and

Launched a dedicated Slack channel for our community members.

So what?

AIAAIC was cited and referenced in research reports and studies by and for the IMF, G20, UNESCO, the United Nations Interregional Crime and Justice Research Institute, the International Committee of the Red Cross, Innovate UK and many other organisations, researchers, academics, NGOs and businesses

Our work was also mentioned in high-profile shareholder campaigns aimed at forcing Apple, Disney, Paramount and others to publish annual AI transparency reports and ethical guidelines

A growing number of highly qualified and motivated volunteers got involved with us and/or continued to be involved with us in various capacities, from data collection and management to editorial, analytics, marketing and technology infrastructure

Members of the UN High-Level Advisory Board on AI, UK House of Lords and European University Institute provided us with strategic and operational advice

The number of users of our website (re-)doubled and newsletter traffic and subscriptions grew significantly

Individual donations covered our operating costs.

We intend to build on this platform during 2025 and will share an outline of our plans shortly.

Your support is critical to keeping us going

A public interest initiative, AIAAIC is run - for free - by a diverse community of people passionate about opening up and bringing to heel the cosy world of technological innovation-at-any-cost.

To do this, we are - and remain wholly committed to being - independent. In this regard, we take seriously actual or potential conflicts of interest and do not accept funding or in-kind donations from commercial entities.

We are also committed to being technologically and politically non-partisan.

Please join us, tell others, and walk the transparency and accountability talk.

Your support is greatly appreciated.

Thank you,

Charlie Pownall

Founder, AIAAIC
